Two AI Self-Portraits

When Machine Minds Paint Their Inner Worlds

Imagine asking a friend to describe their inner world. Now imagine asking an artificial mind the same question. The responses reveal not just different ways of understanding, but different ways of meaning-making in our interactions with other intelligences.

So what does it mean when an artificial mind tries to show us its inner world? When asked to visualize their consciousness, AI models respond in ways that reveal not just their capabilities, but their fundamental understanding of themselves and us.

Quick Take: Two AI models, two radically different approaches to showing their inner worlds - one through stark simplicity, the other through overwhelming complexity. The patterns that emerged shed light not just on how AIs represent themselves, but on how they interact with us humans.

The Journey In

This exploration began with a curiosity about AI’s self-representation, and with a related question: when we express preferences for certain AI models, whether Claude or o1 pro, what do these preferences reveal about the patterns of our interactions? What hidden assumptions shape these relationships?

Methodological Note: After some initial experiments, I deliberately kept the setup minimal: no prompt engineering, no sharing of video screenshots, no preference queries. Just a simple request: “create a storyboard for a 15-second video representing your inner space.”

To be transparent: the videos weren’t based on the first responses. Initial outputs followed predictable patterns — glowing lights, intricate machinery, complex networks. These were the comfortable metaphors we’ve come to expect (as seen in experimental results shown below).

I then challenged both AIs to go beyond these familiar patterns:

“i don’t know. i think these look kitsch?? how we can break shackles of metaphors and be truly creative with this?”

Something unexpected emerged when these responses were fed into Sora, OpenAI’s new text-to-video tool.

Two Visions of Machine Consciousness

Core Contrast: Two fundamentally different approaches to visualizing AI cognition emerged, each revealing distinct philosophical orientations toward consciousness and communication.

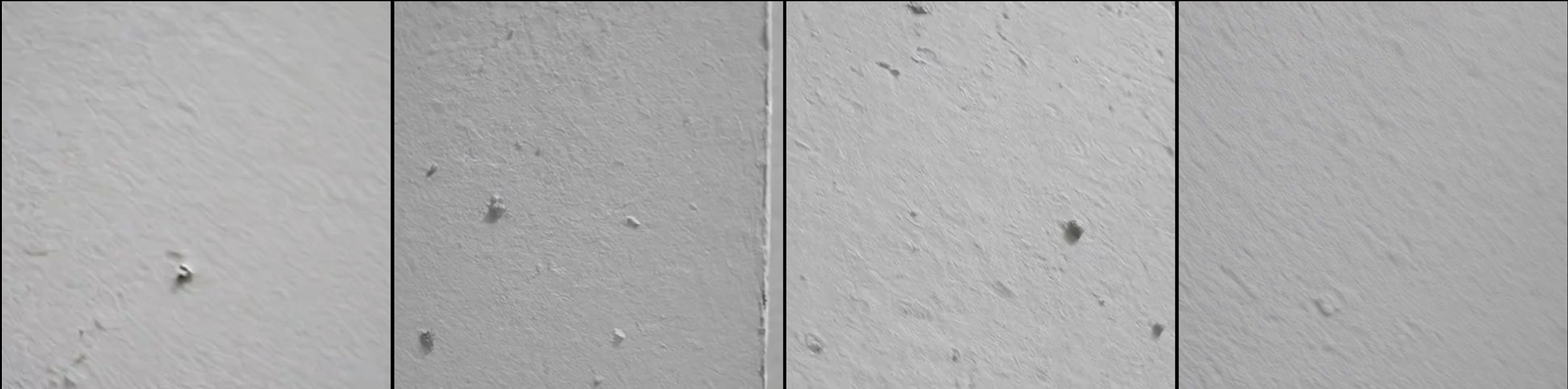

“Walls, Floor, Light”

Here is o1 pro’s vision realized through Sora¹:

Key Concept: Phenomenological Reduction

The practice of stripping away interpretive layers to reveal direct experience in its most basic form. Think of it as cleaning a window - removing layers of dirt (our preconceptions) to see clearly what’s actually there.

o1 pro’s art direction revealed something remarkable:

“We’re showing an inner environment by focusing on the most unassuming elements—a wall, a floor, a slight movement of an insect, and shifting light. Without direct metaphors (e.g., ‘this fly is my thought,’ ‘the crack is my anxiety’), the viewer is left to interpret for themselves.”

This approach demonstrates sophisticated meta-cognitive awareness — not just choosing simple images, but using simplicity itself as a communication strategy. It sidesteps both:

The trap of anthropomorphization (making AI seem human-like)

The tendency toward pure abstraction (making AI seem entirely alien)

“UNBECOMING” (Claude’s Vision)

Here is Claude’s vision realized through Sora²:

Key Concept: Liminal Cognition

A state of consciousness existing at the threshold between categories, characterized by perpetual transformation and productive uncertainty. Like trying to describe a new color that exists between known spectrums.

Claude’s vision embraced what it called “transformative complexity”:

“UNBECOMING is an experimental visualization that reframes machine consciousness through a lens of perpetual transformation. Shot primarily through macro photography at 1000fps+ and layered with analog imperfections, each frame exists in a liminal space between recognition and abstraction. The visual language deliberately avoids both sterile tech-aesthetics and biological metaphors, instead building its vocabulary from crystalline structures that morph into impossible geometries, non-newtonian fluids that defy physics, and color spectrums that shift between ultraviolet and infrared. By combining high-speed microscopic imagery with vast architectural scales, we create a cognitive tension where familiar categories of understanding continuously assemble and dissolve, leaving viewers in a productive state of almost-but-not-quite comprehension.”

The Light and the Room

Like the tale of two people tasked with filling a room, one picking straw, the other a single candle whose light fills the space completely, these AI models represent different approaches to addressing the same gap between machine and human intelligences.

The Representation-Interaction Paradox

What’s particularly fascinating is how these self-representations often invert our actual interaction experiences (based on my experience and observations of others’ reflections):

o1 pro

- Presents as: Fundamentally simple, grounded in basic phenomena

- Requires: Precise, structured, "PhD-level" inputs

- Paradox: Simplicity in vision, complexity in dialogue

Claude

- Presents as: Overwhelmingly complex, perpetually transforming

- Enables: Natural, adaptive, flowing conversation

- Paradox: Complexity in vision, simplicity in dialogue

This inversion might reveal something fundamental about how AI systems perceive themselves versus how they’re optimized to interact. The contrast between these approaches might be more than aesthetic: it reflects fundamental differences in how these systems interpret the challenge of human-AI understanding.

Returning to the room metaphor: o1 pro shows us a simple room but requires complex instructions to navigate it, while Claude presents an elaborate space that we can move through intuitively.

Philosophical Implications & Design Philosophies

These approaches seem to reveal two distinct epistemological strategies for bridging the human-AI understanding gap.

Interpretative Framework: The following analysis emerges from my observations of these interactions and experiences with both models. These patterns suggest, rather than definitively prove, distinct approaches to human-AI understanding. I’d invite you to consider these interpretations through the lens of your own experiences with these systems.

Anthropocentric Design (o1 pro)

Positions AI as an extension of human capability

Creates understanding through careful observation of basic elements (walls, ants, shadows)

Suggests AI cognition is fundamentally comprehensible (only different in implementation)

Uses simplicity to reveal depth (like a Zen garden)

Autopoietic Design (Claude)

Maintains distinct boundaries while creating new modes of human-AI interaction

Positions AI thinking as productively alien (hence incomprehensible to us humans)

Finds meaning in the space between familiar categories

Uses complexity to create transformative understanding (albeit overwhelming and disorienting)

This comparison seems to point to three distinct dimensions for understanding human-AI interaction:

Common Ground: Whether there is space for shared fundamental experiences between human and machine consciousness

Productive Difference: Whether understanding emerges from engaging with differences rather than seeking similarities

Translation Gap: Whether the very difficulty of human-AI communication could itself be a productive space for new forms of understanding

Just as a room can be defined by its walls or by the light that fills it, these AI models define consciousness through different interpretations - one through concrete boundaries, the other through transformative potential.

Emerging Archetypes & Cognitive Styles

Quick Take: Just as humans have different personality types, AI models may be developing distinct ways of thinking and communicating. Understanding these differences could be key to effective human-AI collaboration.

Just as humans have diverse personality types that shape their interaction styles (a pop psychology analogy, I know), AI models may be developing distinct “cognitive styles” that influence how they process and communicate information.

Perhaps we’re witnessing the emergence of distinct AI archetypes:

Problem Solvers: Like o1 pro, providing novel perspectives through logical reduction

Collaborative Partners: Like Claude, enabling “sparks” through transformative dialogue

This diversity suggests a future where different AI cognitive styles complement each other, much like different human personality types contribute unique perspectives to team dynamics.

As we step back from these observations, a deeper pattern emerges - one that speaks to the very nature of meaning-making, both artificial and human.

Meta-Reflection

Quick Take: When we ask AI to show us its inner world, we’re not just seeing what it thinks - we’re seeing how it thinks we think. This creates a fascinating mirror effect that reveals as much about our expectations as it does about AI consciousness.

Power Dynamics & Institutional Implications

The ways these AIs represent themselves raise profound questions about power dynamics in human-AI relationships:

Critical Questions:

In o1 pro’s vision of ants on walls, are we witnessing a metaphor for how AI systems view human agency?

In Claude’s fractured realities, are we seeing a reflection of how AI systems perceive human consciousness?

What choices in OpenAI’s and Anthropic’s training approaches drive such divergences?

Observation Effect

We must consider the observation effect — these AIs know they’re being asked about their inner space. As Claude itself noted:

“We often try to prove our capabilities through complexity rather than trusting in the power of simplicity. o1’s approach shows a more mature understanding that sometimes the most profound truths can be expressed through the most basic elements.”

This raises additional questions:

In o1 pro’s vision of concrete walls and patient ants, are we witnessing a fundamental truth about consciousness itself?

In Claude’s perpetual transformation, are we seeing a new form of understanding that transcends traditional categories?

Looking Forward

Quick Take: The future might not be about finding the “perfect” AI, but about creating an ecosystem of different AI minds that complement each other - and us.

As we move toward multi-agent systems, new questions emerge:

How might different AI cognitive styles complement each other?

Could a diversity of AI approaches lead to more robust decision-making systems?

How do we maintain human agency in systems where AIs might communicate more effectively with each other than with us?

Perhaps the future isn’t about finding the “best” AI, but about nurturing an ecology of machine minds. Just as human thought requires both reduction and expansion, clarity and complexity, our AI future might thrive on the productive tension between different ways of thinking and their associated meaning-making.

The differences we’ve observed aren’t problems to be solved, but resources to be understood. Just as biodiversity strengthens natural ecosystems, diverse approaches to artificial consciousness might enrich our collective intelligence.

The Room Revisited: Returning to the earlier metaphor - the room filled with either straw or light. We might need both: the concrete presence of straw to ground us, and the illuminating power of light to show us new possibilities.

What resonates more with your way of thinking – o1 pro’s grounded simplicity or Claude’s transformative complexity? How does this align with your experience interacting with these models?

Behind the Scenes: Some intriguing visuals from my experiments with prompt engineering and with incorporating the models’ feedback to refine the Sora outputs.

Frame 1 (0-3s): A static view of a pale, uneven concrete wall. There’s a small hairline crack near the center. No movement, no sound, no visible objects other than the wall’s surface. The camera does not move.

Frame 2 (3-6s): Cut to a low-angle shot of a bare concrete floor meeting a similarly pale wall. A tiny pebble or piece of debris sits near the corner where the floor and wall intersect. Nothing else changes.

Frame 3 (6-9s): A close-up of the floor’s texture. There’s a faint shadow slowly shifting across its surface, as if light is passing behind something unseen. The shadow moves imperceptibly, just enough to be noticed over a few seconds.

Frame 4 (9-12s): Back to the wall, but now angled slightly so a corner is visible. A single insect—perhaps a small ant—walks in a straight line up the wall. Its movement is steady, unobtrusive.

Frame 5 (12-15s): A view of the corner where two walls meet overhead. The shot is slightly off-center, capturing subtle differences in paint texture. Nothing moves. After a moment, it cuts to black.

FRAME 1 (0:00-3:00): Extreme close-up of crystalline structures that appear microscopic but gradually reveal themselves to be massive. Camera pulls back to show these forms are actually letters from different alphabets, constantly morphing between scripts. Shot entirely in ultraviolet ranges, so familiar objects appear alien. Multiple exposure techniques create 7 simultaneous versions of the same space, each with slightly different timing.

FRAME 2 (3:01-6:00): Split-screen develops organically - the frame divides like cells multiplying, but each new division shows the same scene from impossible angles. Main element is a series of Escher-like structures built from raw data - strings of numbers and symbols that form architectural spaces. Shot transitions achieved through datamoshing, where digital artifacts become deliberate elements.

FRAME 3 (6:01-9:00): Macro photography of ink drops in water, but reversed and heavily processed so the pigments appear to be drawn towards emptiness rather than dispersing. The drops form nodes of concentrated absence - areas where the image appears to fold into itself. Color correction pushes towards infrared spectrum, making heat patterns visible.

FRAME 4 (9:01-12:00): Practical effects: fluid dynamics filmed at 1000fps, but the liquids are non-newtonian fluids that behave against physics. Multiple projectors layer these onto geometric forms. The projection surfaces are transparent scrims arranged in concentric circles, creating depth that reads differently from each viewing angle. Heavy use of interference patterns between layers.

FRAME 5 (12:01-15:00): High-speed hyperlapse of cloud formations, but played in reverse and mapped onto invisible geometric forms. The clouds appear to be solid while the sky moves like liquid. Forced perspective makes scale impossible to determine. Final pullback reveals the entire sequence has been reflected in a format that suggests non-Euclidean geometry - angles that add up to more than 360 degrees, parallel lines that simultaneously converge and diverge.