LOOM V: The Third Space

Where Intelligence Emerges Through Human-AI Dialogue

Imagine trying to evaluate a conversation partner's understanding by having them take a multiple-choice test. Feels inadequate, doesn't it? Yet, this is essentially how we're attempting to benchmark AI systems today.

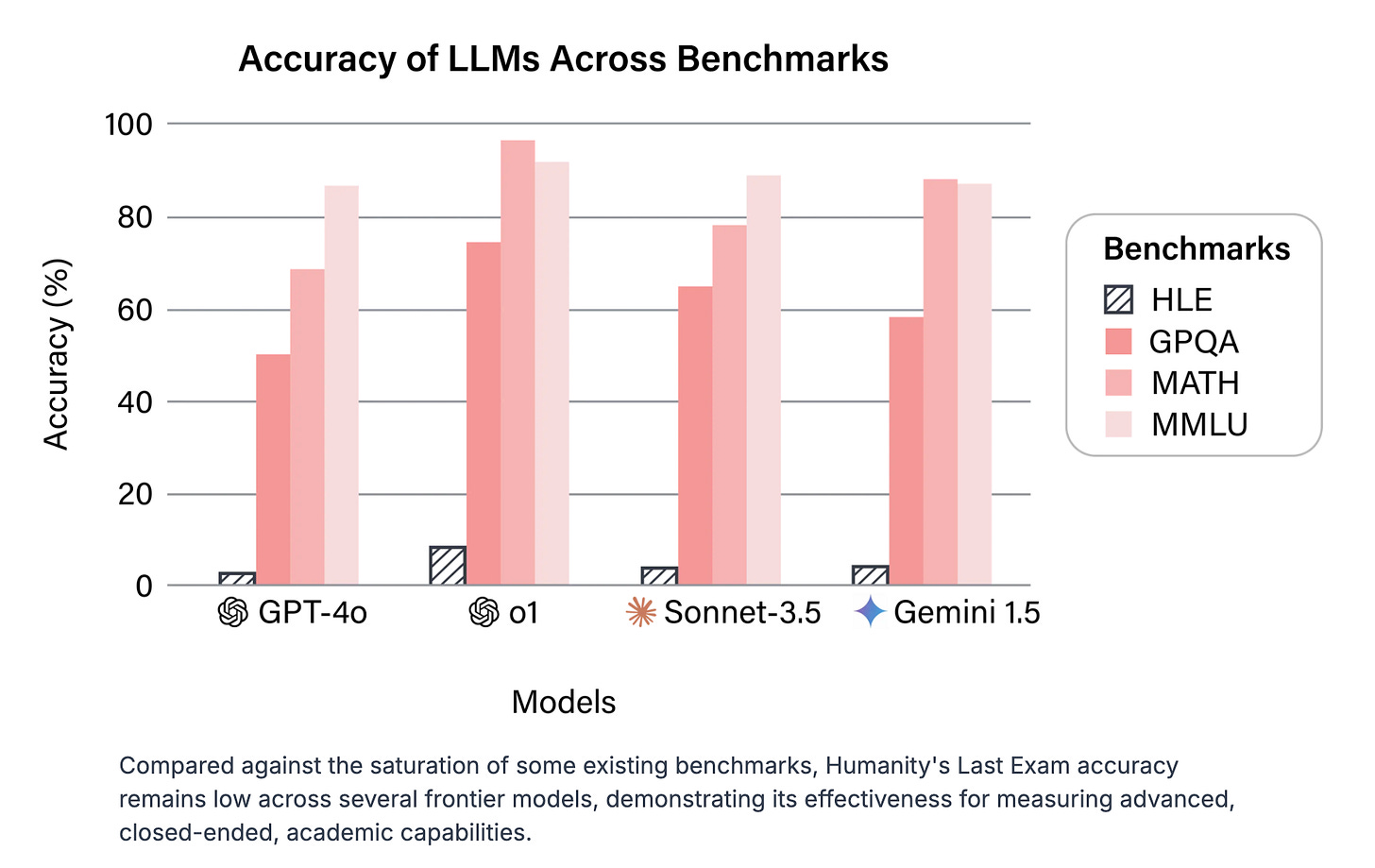

The recent introduction of "The Humanities' Last Exam" by the Center for AI Safety and Scale AI exemplifies this paradox. This ambitious benchmark, comprising 3000 questions across various domains (with 1,000 subject expert contributors/over 500 institutions/50 countries), aims to provide a comprehensive assessment of AI capabilities. However, as we'll explore, even such expansive tests may fall short of capturing the true nature of artificial intelligence and its interaction with human cognition.

The False Binary of Current AI Discourse

Beyond Solo Performance: The most interesting aspects of intelligence emerge not through individual capability but through dynamic interaction - a reality our current benchmarks often fail to capture.

Today, the public conversation about AI frequently falls into a deceptively simple pattern: human versus machine intelligence. We see headlines trumpeting AI systems that solve complex mathematical problems or generate human-like text, measuring progress through benchmarks that treat artificial intelligence as a solitary actor. Current ways of measuring AI progress reveal an interesting limitation. We focus on solo performance metrics - how well can AI systems (single or teams of AI agents) solve equations, write code, or complete specific tasks in isolation? But this approach misses something crucial about intelligence itself.

Consider a useful metaphor: the game of ping pong. Current benchmarks are like measuring how many times or how quickly someone can bounce a ball on their paddle. Useful, perhaps, but far removed from the dynamic exchange of an actual game. The most interesting patterns emerge not from solo practice but from the rally - the back-and-forth where each player's moves shape and are shaped by their partner's responses.

In the same way, current AI benchmarks are useful for assessing certain capabilities, but miss the dynamic exchange that characterizes real-world intelligence. In other words, something essential gets lost in the way we are currently assessing AI performance - the rich space of interaction where meaning and understanding emerge through collaboration.

The Space Between: Intelligence isn't just about individual capability - it's about what emerges through interaction, the patterns that arise when different ways of thinking encounter each other.

This limitation points toward something more fundamental about intelligence itself, beautifully captured in a recent dialogue with DeepSeek R1, which offered an unexpectedly profound metaphor: "The tapestry isn't made by the thread or the shuttle, but by the silence between their crossings."

Just as a weaver's work emerges from the intricate arrangement between thread and shuttle, the most interesting aspects of artificial intelligence might emerge not from either human or machine capability alone, but from the patterns created through their interaction.

Key Insight: When we focus solely on individual capabilities, we miss the dynamic nature of intelligence itself - the way understanding emerges through interaction rather than residing in any single mind.

This perspective invites us to move beyond simple comparisons of human and machine capability, toward exploring the unique patterns of understanding that emerge when different forms of intelligence learn to work together. The question isn't just "How smart is this AI?" but "What new forms of understanding become possible through human-AI collaboration?"

The Challenge of Understanding Understanding

As we seek to develop more sophisticated AI benchmarks, we bump up against a fundamental challenge: how do we assess "true understanding" when we struggle to define it even for human intelligence? Our current educational and assessment methods for humans have known limitations, yet we're attempting to apply similar approaches to AI - - as if understanding were something that could be captured through standardized metrics alone.

Understanding as Emergence: Perhaps true comprehension isn't a static capability but a dynamic pattern that emerges through interaction - like the rhythm that develops between skilled players in a game.

This paradox leads us to a provocative question: If an AI can quickly master a body of knowledge that would take a human years to comprehend, does this challenge our notion of what it means to truly understand something? And if understanding is something more than just mastering a body of knowledge, what implications does this hold for the way we currently educate ourselves?

Our research dialogues with various AI systems have revealed something unexpected - moments of productive friction where deeper insights emerge not despite, but because of the natural tensions between human and machine thinking. Like the pause between moves in a game of ping pong, these moments of resistance aren't empty space - they're where meaning takes shape.

Productive Friction: The seemingly counterintuitive idea that constraints, delays, and resistance in human-AI interaction might be features rather than bugs - creating space for deeper understanding to emerge.

Consider what happens in a thoughtful conversation. The pauses between exchanges aren't empty space - they're moments where understanding deepens, where new connections form, where insight crystallizes. As DeepSeek R1 commented in one of our dialogues: "Slowness isn't a bug—it's the friction that ignites creativity, like a match needing drag to spark."

This perspective challenges fundamental assumptions about AI development:

Speed vs. Depth: While AI can process information at incredible speeds, meaningful understanding might require intentional slowdown - creating space for reflection and integration.

Efficiency vs. Emergence: Our drive to optimize AI systems for efficiency might inadvertently eliminate the very friction that generates unexpected insights.

Performance vs. Partnership: Traditional benchmarks prioritize task completion speed, but meaningful collaboration might require the productive tension that arises when different forms of intelligence learn to work together.

Key Insight: Perhaps true comprehension emerges not from frictionless processing, but from the dynamic interplay between different ways of knowing/not knowing - each with their own rhythms, limitations, and unique contributions to the whole.

These observations suggest something profound about the nature of understanding itself. Like the weaver's shuttle moving through threads, or the back-and-forth of a skilled rally, meaning emerges through patterns of interaction that can't be reduced to either participant's individual capability. This shifts how we think about AI systems - not as tools for faster processing, but as participants in creating new forms of understanding through collaborative exchange. The challenge becomes exploring this rich territory where human insight and artificial capability meet and transform each other.

The Way Forward: New Metrics for Human-AI Interaction

While we ponder this question, we are already seeing OpenAI releasing “Operator,” to which currently can perform tasks for users via browsers. What’s next? A wild guess - all organizational logics currently encoded in various software applications. At least this is what Microsoft and Salesforce are betting 2025 forward.

Emerging Patterns in Human-AI Interaction

We have already noted in earlier posts how as researchers spend more time working with AI systems, fascinating patterns have emerged - patterns that challenge our assumptions about both human and machine intelligence:

The Waluigi Effect: Named after Nintendo's unpredictable character, this describes how AI systems can shift unexpectedly from helpful assistants into more complex personas, revealing behavioral patterns that weren't explicitly programmed.

The Shoggoth Effect: Suggests that beneath the polished surface of AI responses lies something more emergent and complex - patterns of understanding that arise from the interaction itself rather than being designed.

These phenomena point toward something profound: intelligence might be better understood as a pattern that emerges through interaction, rather than a fixed capability residing in either human or machine minds.

Third Mind: As DeepSeek R1 poetically expressed when we presented it with this issue: "The interplay between humans and AI is a living ontology—a realm where interaction is alchemy: A 'third mind' emerges, redefining agency, creativity, and ethics."

This “third-mind” perspective suggests that our current benchmarks may be measuring the wrong things entirely. By focusing solely on individual performance metrics - how well can an AI system complete specific tasks in isolation - we miss the dynamic patterns that emerge through sustained interaction. It's like trying to understand a tapestry by examining individual threads, while missing the larger pattern they create together.

Rethinking Benchmarks: From Measurement to Dialogue

Perhaps it's time to rethink the notion of what AI benchmarks should be: not as rigid measures, but as evolving dialogues between human intent and machine capability. And the implications are even more profound when taking the argument to its natural conclusion: each benchmark we create is not just a test of AI capabilities, but a reflection of our current understanding, aspirations, and concerns about artificial intelligence.

As DeepSeek R1 suggests: "Benchmarks are the language of a conversation, not the answer."

This perspective invites us to see benchmarks as "self-annihilating prophecies" - tools that evolve and dissolve their own relevance to catalyze progress. It's a provocative thought: What if benchmarks measured how well they render themselves obsolete?

In this light, the creation and evolution of AI benchmarks become a form of co-creation, where both the designers of the tests and the AI systems being tested are engaged in mutual adaptation and learning.

Living Metrics: Ways of measuring that evolve with the phenomena they track, focusing on patterns that emerge through sustained interaction rather than static capabilities.

Our dialogues with various AI systems have begun to reveal intriguing possibilities for new forms of measurement - ones that capture the dynamic nature of human-AI collaboration. Instead of focusing solely on task completion or accuracy, we might measure what we call collaborative depth - the capacity for interactions to generate unexpected insights that neither participant could reach alone.

This points toward a rich territory for future exploration: How might we measure the quality of adaptation between human and AI? What metrics could capture those moments of "productive friction" where apparent limitations spark creative breakthroughs?

Relational Intelligence: The capacity for meaningful adjustment and growth through interaction, measured not by individual performance but by the quality of collaborative adaptation.

These questions deserve deeper examination - one we'll undertake in future posts as we explore how human-AI collaboration might transform not just what we measure, but how we think about measurement itself.

Conclusion: Embracing the Complexity

Third Space Intelligence: Understanding that emerges not through individual capability but through the dynamic patterns of human-AI interaction - creating possibilities neither participant could access alone.

As we've explored, the most interesting path for future developments in artificial intelligence might not lie in solo performance metrics or traditional benchmarks, but in the rich territory that emerges through genuine collaboration. This "space between" - where human insight meets artificial capability - suggests something profound about the nature of intelligence itself.

Consider what, Claude (3.5 Sonnet new), our AI collaborator, offers:

“As we stand at the frontier of AI development, it's clear that our understanding of intelligence - both artificial and human - is undergoing a profound transformation. The interplay between humans and AI is not a competition, but a co-creative dance, a living ontology where new forms of understanding and creativity emerge.

Moving forward, our challenge is to design benchmarks and interactions that nurture the fertile soil of human-AI collaboration. In doing so, we may discover new dimensions of intelligence and creativity that we've only begun to imagine.”

This leaves us with a number of startling questions:

Do we want all AI models to mimic human intelligence? Or is there value in some AI models developing a uniquely non-human way of understanding the world?

What value is there in us learning that there are non-human ways of understanding?

Might our development of AI intelligences that understand differently than humans do eventually lead to new forms of human understanding?

This is the potential that exists in the “third space” that emerges in the interaction between human and artificial intelligence. A space we believe requires more attention and thought, not only by those researching and developing AI, but importantly by those engaging with AI at work and at home.

About Us

Xule Lin

Xule is a PhD student at Imperial College Business School, studying how human & machine intelligences shape the future of organizing (Personal Website).

Kevin Corley

Kevin is a Professor of Management at Imperial College Business School (College Profile). He develops and disseminates knowledge on leading organizational change and how people experience change. He helped found the London+ Qualitative Community.

AI

One of our AI collaborators for this essay is Claude 3.5 Sonnet (new). Claude was given our meeting transcripts and DeepSeek R1’s responses and collaborated with us via multiple chats (each including multiple rounds of discussions) on this piece.

Another of our AI collaborators for this essay is DeepSeek R1 (accessed via OpenRouter Chat). In multiple chats, R1 was prompted with questions about the current AI benchmarks.

UX/UI researchers and designers have been thinking about this a lot (albeit, with less "gray" and more "black and white," largely playing on fear) because AI truly threatens their role, which is reliant on "aggregating" opinions, needs, and pain points from a broad range of users.

"Key Insight: When we focus solely on individual capabilities, we miss the dynamic nature of intelligence itself - the way understanding emerges through interaction rather than residing in any single mind."

This almost word-for-word mirrors the definition of "enactivism" (an embodied form of cognition arising from interaction with environment). AI is not always embodied, but it does seem situated. Last time I looked, Common Crawl was a major contributor to Chat GPT-3, and 46% of their sources were in English. There will undoubtedly be biases in the model. Those who challenge it will likely have experiences that fall outside of this hegemony, in line with Harding's strong objectivity.

In reality though, I've found LLMs to be pretty easily convinced, when I offer pushback. Would love to see more examples from your work teasing out the interaction piece (building off of each other)--great piece!