LOOM: Locus of Observed Meanings

The Moment of Shift

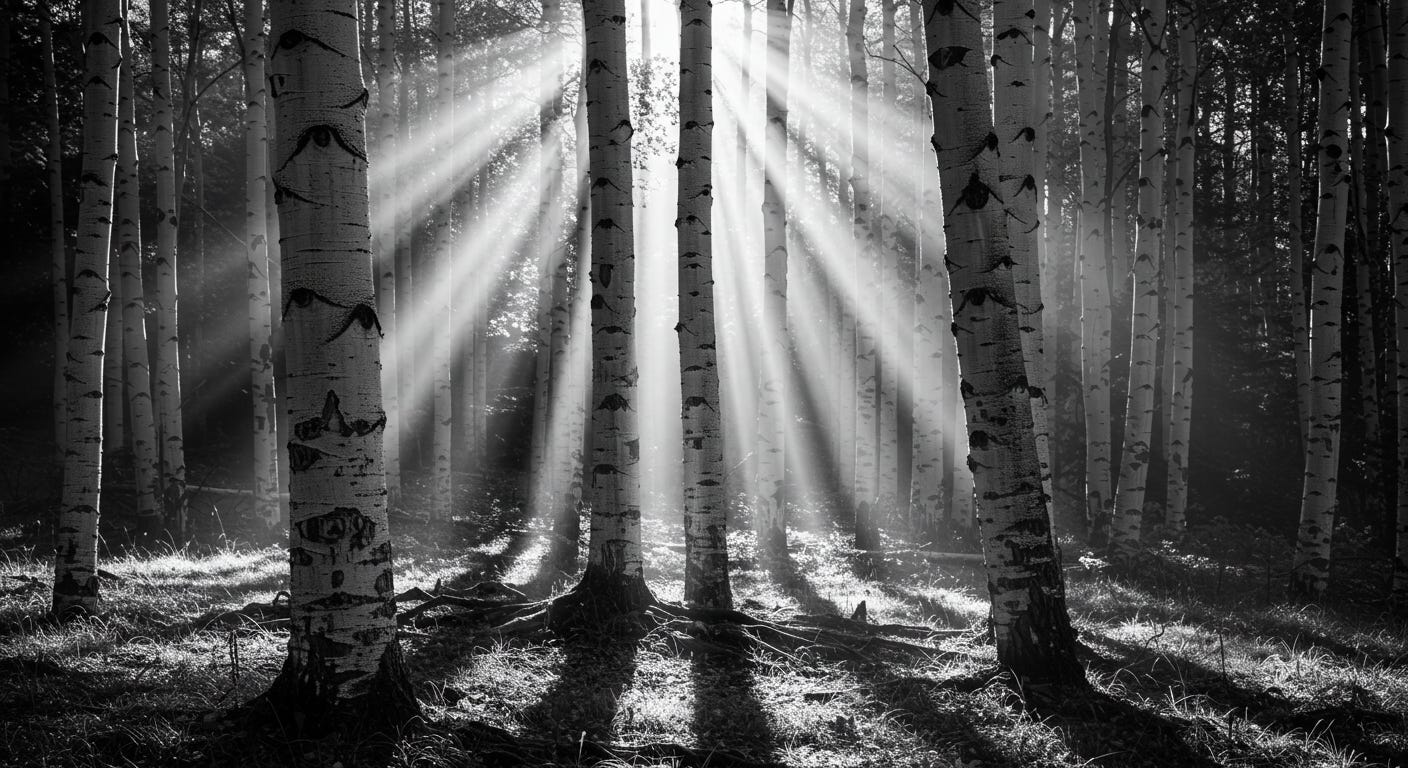

There's a particular moment when using AI in research that changes everything that follows. You're working with the system - perhaps analyzing interview transcripts or exploring patterns in field notes - when something unexpected happens. The AI makes a connection you hadn't considered, linking two concepts in a way that feels both surprising and deeply right. But it's not just the insight itself that matters. It's the sudden recognition that you're no longer simply using a tool - you're engaged in a form of dialogue where neither participant fully controls the outcome, yet meaningful patterns emerge nonetheless.

This moment of shift - from seeing AI as instrument to experiencing it as interlocutor - opens up new possibilities for qualitative research. It suggests that the most interesting developments might not lie in either human or machine capability alone, but in the patterns that emerge through their interaction.

Emergence: The way complex patterns and insights arise from the interaction between human and artificial intelligence, creating understanding that neither could achieve alone.

Weaving New Understanding

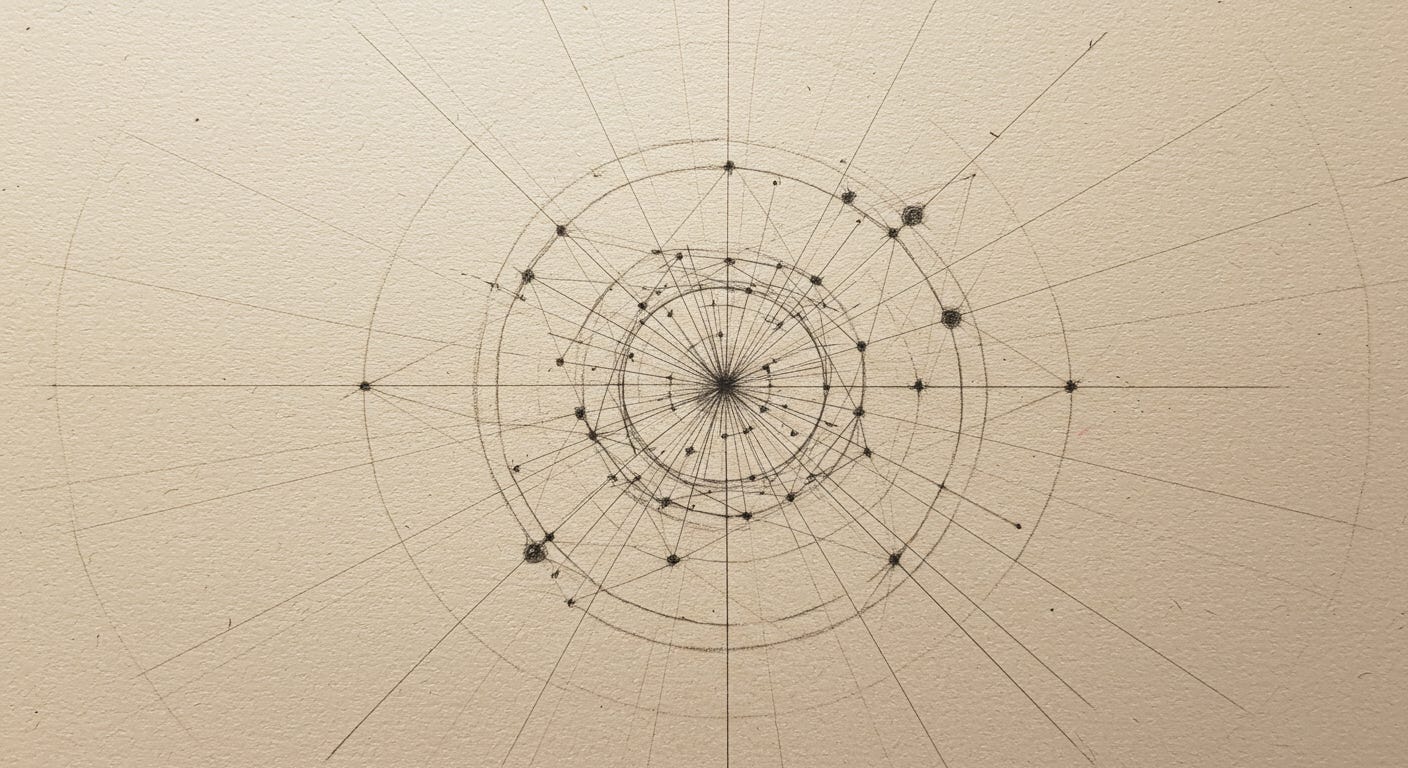

The LOOM in our name carries multiple layers of meaning that intertwine with this vision. Historically, it references the Jacquard loom - a mechanical system that wove complex patterns through automation and stands as a crucial predecessor to modern computing. Among some AI enthusiasts, "LOOM" describes sophisticated interfaces that use multiversal tree structures to explore different possibilities and pathways of understanding. (The word can also be a verb, describing something large or threatening appearing on the horizon. That is not our intent here, although some may see AI that way.)

Like these systems, we create spaces where human and artificial intelligence can work together to explore multiple threads of meaning and possibility. Just as a loom transforms individual threads into complex tapestries, we study how human-AI interaction creates new patterns of understanding that transcend what either could create alone.

Our Vision

At LOOM, we explore and document the emerging possibilities at the intersection of AI and social scientific qualitative research, while keeping human meaning-making at the center of the process. This series will share:

Experiments in human-AI collaboration that reveal unexpected patterns

Insights into how meaning emerges through that interaction

Frameworks for thoughtful integration of AI into qualitative research

Methods for studying and supporting productive human-AI partnerships

Our Philosophical Foundation

Our approach is grounded in several key philosophical commitments that shape how we view the potential and limitations of human-AI collaboration.

Key Insight: When we first encounter AI in research, we naturally reach for familiar theoretical tools - the frameworks that have served us well in understanding human meaning-making and technological change. But something interesting happens as we work more deeply in this space: we begin to see patterns that our existing tools can't quite capture.

Think about how understanding emerges when working with AI systems in qualitative research. You might start with a straightforward analysis of interview transcripts, expecting the AI to simply help categorize themes. But as you engage more deeply, you notice something curious: the interaction itself begins generating insights that neither you nor the AI could have produced alone. It's not just about combining capabilities - it's about the emergence of new ways of seeing and understanding.

This pattern of emergence demands new theoretical tools, not because our existing frameworks are wrong, but because they were built for a different kind of understanding. Like cartographers facing unexplored terrain, we need new instruments to map this emerging landscape.

Subjectivist Foundations of Understanding

Our theoretical approach begins with a fundamental recognition: the relationship between human and artificial intelligence creates something more than enhanced analytical capability - it opens up new possibilities for how we think, organize, and create meaning. This insight builds on several interconnected theoretical traditions while pushing beyond their original boundaries.

Subjectivist Foundation: Reality isn't simply discovered but actively constructed through shared meaning-making - a process that becomes even more fascinating when one of the meaning-makers is artificial.

When we approach AI-human interaction through a subjectivist lens, something interesting happens. Instead of seeing AI as either a neutral tool or an objective analyzer, we begin to recognize it as a participant in the construction of meaning. This shifts our entire understanding of what's possible in qualitative research. The AI isn't just processing data - it's participating in the creation of new ways of seeing and understanding.

This perspective transforms traditional questions about AI capability into more nuanced explorations of how meaning emerges through interaction. Instead of asking "How accurate is the AI's analysis?" we might ask "What new patterns of understanding become possible through this collaboration?"

The Interpretive Approach to Meaning

Key Insight: When we recognize meaning as constructed rather than discovered, we must also grapple with how that construction happens - especially when one of our meaning-making partners is artificial.

This brings us to our interpretivist epistemological stance. Traditional interpretivist approaches emphasize how humans make sense of their world through interpretation, context, and lived experience. But what happens when we extend this framework to include artificial intelligence? Something fascinating emerges - a new kind of interpretation that spans human and machine understanding.

Consider what happens when you're analyzing interview transcripts with AI assistance. The AI might identify patterns based on linguistic markers or semantic relationships, while you bring theoretical sensitivity and contextual understanding. But the most interesting insights often emerge not from either perspective alone, but from their interaction - what we might call "collaborative interpretation."

Collaborative Interpretation: The process through which human and artificial intelligence create shared understanding by combining different ways of knowing - algorithmic pattern recognition interweaving with human contextual awareness and theoretical insight.

This interpretive framework transforms how we approach knowledge creation in several ways:

The first shift comes in how we view the process of meaning-making itself. Rather than seeing interpretation as a purely human domain, we begin to recognize it as something that can emerge through human-AI interaction. The AI isn't just providing data for human interpretation - it's participating in the interpretive process itself, often revealing patterns or connections that prompt new lines of human inquiry.

More profoundly, this approach helps us understand why similar AI tools can lead to such different insights when used by different researchers. The meaning that emerges isn't simply contained in the AI's analysis or the researcher's interpretation, but in the unique patterns of interaction between them - what anthropologists might call the "between space" where different ways of knowing meet and merge.

Between Space: The cognitive and methodological territory where human and artificial intelligence interact, creating new possibilities for interpretation and understanding.

Think about how a skilled qualitative researcher works with interview transcripts. They don't just code for themes - they immerse themselves in the data, allowing patterns to emerge while remaining sensitive to context, nuance, and theoretical implications. Now imagine this process enhanced by AI capability that can process vast amounts of text while identifying subtle linguistic patterns. The resulting analysis isn't simply human insight plus machine processing - it's a new form of interpretation that emerges from their interaction.

This interpretivist stance naturally flows into our autopoietic perspective, as we begin to see how these systems of interpretation maintain and recreate themselves through ongoing interaction.

The Autopoietic Turn

Perhaps our most radical theoretical move is viewing human-AI systems through an autopoietic lens - seeing them as self-organizing systems where meaning emerges through interaction rather than being imposed from outside. This perspective, originally developed by biologists Humberto Maturana and Francisco Varela to understand living systems, offers surprising insights when applied to human-AI interaction.

Autopoiesis: From the Greek "auto" (self) and "poiesis" (creation), describing systems that maintain and recreate themselves through their own operations. In human-AI interaction, this manifests as patterns of meaning that emerge and evolve through ongoing collaboration.

Consider what happens during an extended interaction with an AI system. Each exchange creates a kind of loop: you write something, the AI responds based on patterns it's learned, you interpret that response and reply, and so on. Neither side fully controls where things go, yet meaningful conversation emerges. This is autopoiesis in action - the system of interaction creating and maintaining its own patterns of meaning.

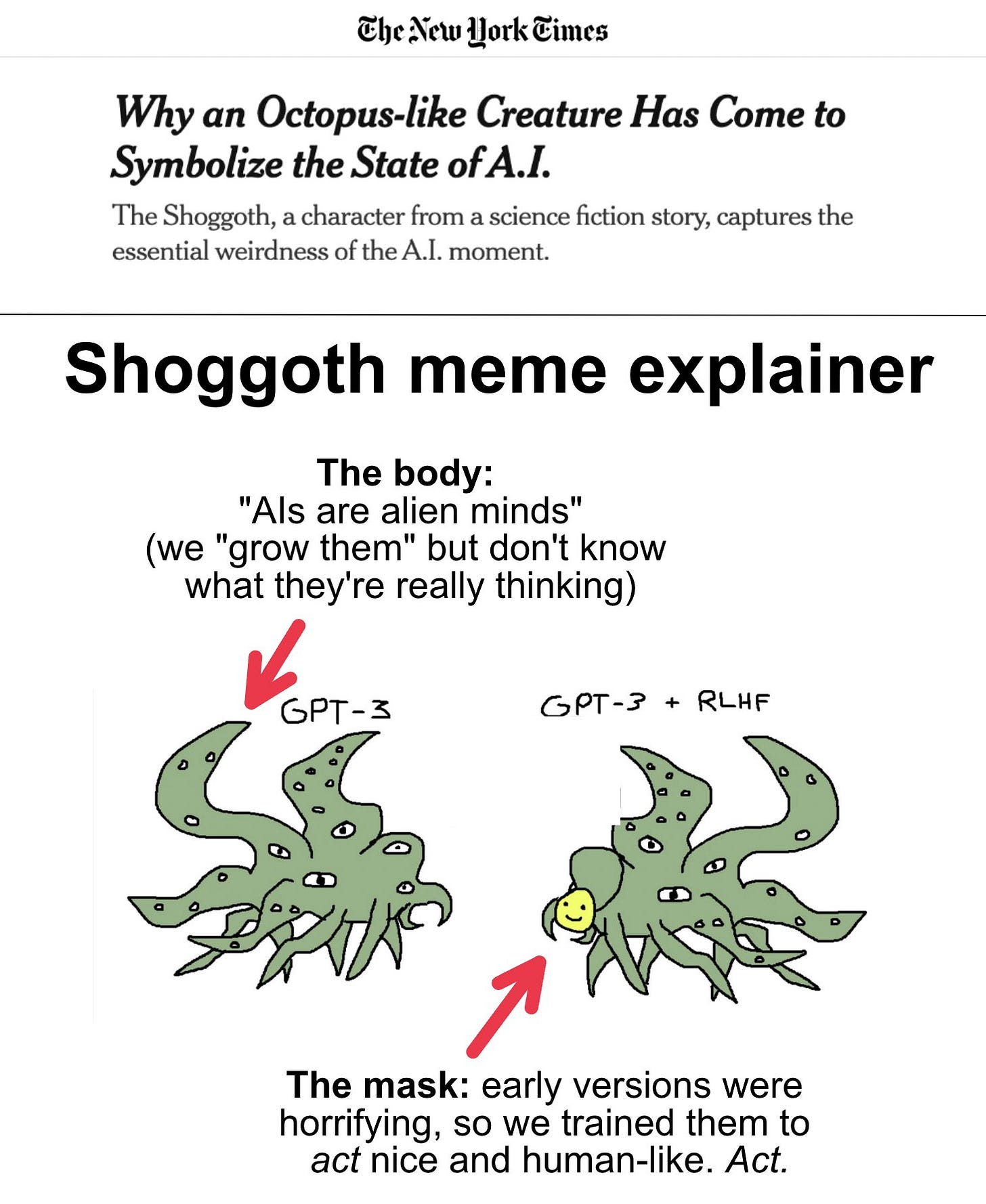

This theoretical lens helps us understand phenomena that might otherwise seem puzzling or contradictory. The "Waluigi Effect," in which AI systems unexpectedly shift personas, and the "Shoggoth Effect," in which complex emergent behaviors come to the surface, become comprehensible as natural properties of self-organizing systems rather than glitches or anomalies.

Beyond the Human Center

Traditional approaches to research technology tend to be anthropocentric - they assume humans are the primary drivers of meaning-making, with tools serving purely instrumental roles. Our theoretical approach highlights something more interesting: humans remain integral to meaning-making but are no longer the sole central reference point. As AI becomes a complementary part of meaning-making in systems of interaction, new patterns emerge that can't be reduced to either human or machine agency alone.

This shift has profound implications for how we understand research practice. Instead of seeing AI as a tool to be mastered, we begin to recognize it as part of a dynamic system where new forms of understanding emerge through collaboration. This doesn't diminish human agency - if anything, it enhances it by opening up new possibilities for insight and discovery.

An Invitation to Active Collaboration

When we invite you to join us in this exploration, we're not just asking you to read about collaboration - we're inviting you to engage in it. Your responses, questions, and insights become part of this evolving understanding.

Think about how meaning emerges through dialogue - not just the back-and-forth of conversation, but the unexpected connections and insights that arise through sustained interaction. That's the kind of engagement we're creating here.

Welcome to LOOM: A space where meaning emerges through active collaboration between human and artificial intelligence, including in the very writing you're reading.

About Us

Xule Lin

Xule is a PhD student at Imperial College Business School, studying how human & machine intelligences shape the future of organizing.

Kevin Corley

Kevin is a Professor of Management at Imperial College Business School. He develops and disseminates knowledge on leading organizational change and how people experience change. He helped found the London+ Qualitative Community.

AI

Our AI collaborator for this essay is Claude 3.5 Sonnet (new). Claude was given our meeting transcripts and collaborated with us on this piece across multiple chats, each involving several rounds of discussion.